Improving AWS SDK latency on EKS

Table of Contents

- Introduction

- The Problem

- Basics on how AWS SDK performs authentication

- Connecting the dots

- Getting a fix

- Achievements

Introduction

At Rokt, we have services written in various languages and deployed on different infrastructure. Over the last couple of years, we’ve migrated our services onto Kubernetes. This blog post walks through our findings and how we fixed a puzzling increase in the startup and tail latency of one of our services, caused by the AWS SDK and the way it performs authentication on EKS.

The Problem

To illustrate the issue, here is a high-level sequence diagram of one of our affected applications:

The application is written in Go using the AWS SDK for Go v1.34.23 and performs multiple AWS SNS Publish API calls in parallel, so the latency of the overall request is dictated by the slowest Publish call.

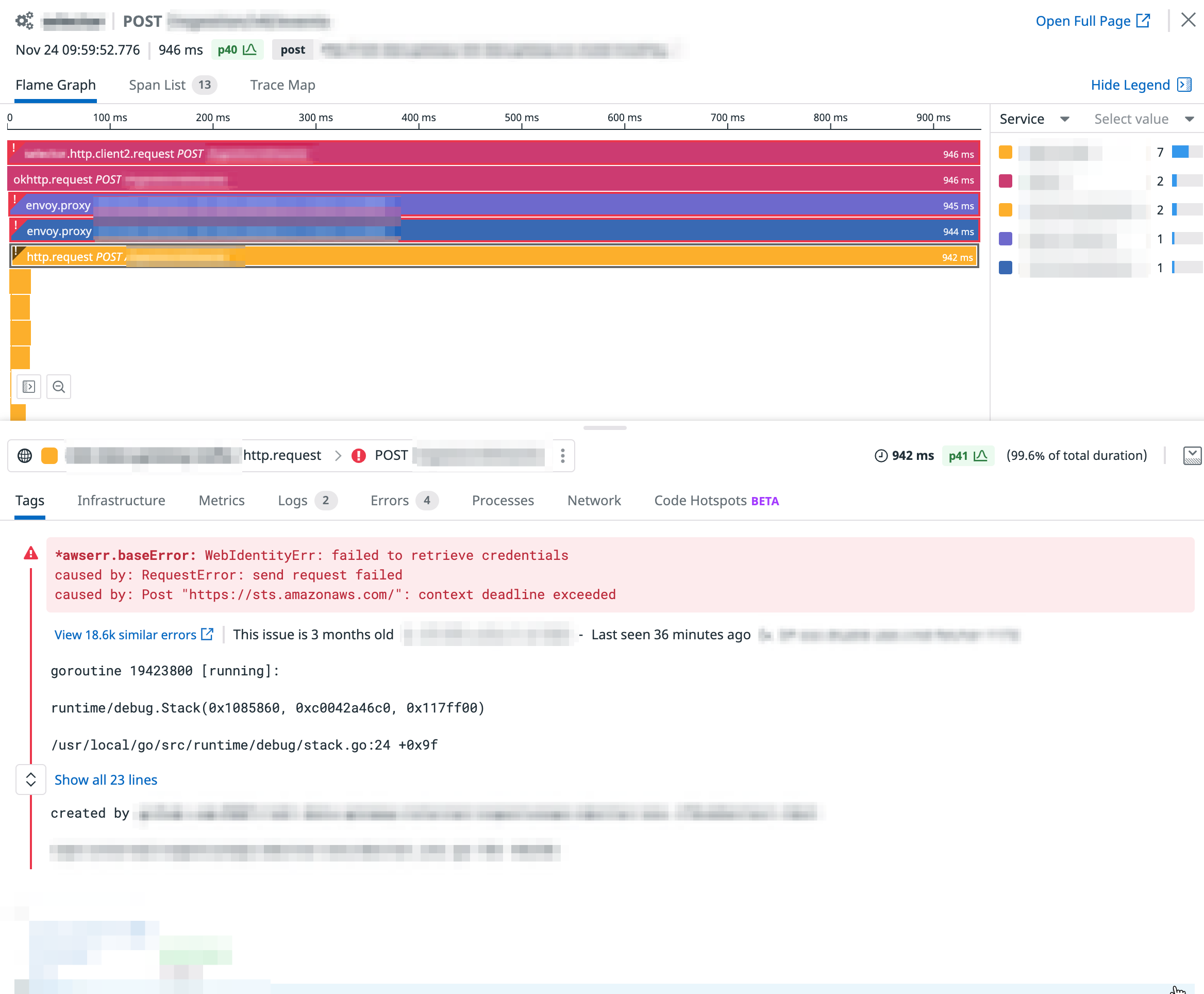

With APM tracing, we were able to identify that the increased latency came mostly from the additional AWS STS call made by the SDK clients during application startup:

Basics on how AWS SDK performs authentication

Before going into further detail, we need to establish some basic understanding of how AWS SDK performs authentication.

Most AWS API requests must be cryptographically signed so that AWS can verify they come from a legitimate caller. AWS supports more than one signing process, and the most commonly used one is Signature Version 4 (SigV4). Credentials are required to initialize the signing process. To make life easier, the AWS SDK provides a default credential chain that looks up credentials in several places, through different providers, to accommodate multiple deployment scenarios.

Credential Providers

Here is a list of credential providers commonly found across the AWS SDK's language implementations, using the Go SDK as the example.

| Credential Provider | Note | Speed |
|---|---|---|
| Static Credential Provider | Credentials supplied via code | Very fast: < 1 ms |
| Shared Credential Provider | Parsed from the local file system | Very fast: < 1 ms |
| Environment Variable Provider | Credentials fed via OS environment variables | Very fast: < 1 ms |
| EC2 Role Provider | Typically used by EC2 deployments | Fast: < 10 ms |
| Endpoint Provider | Typically used by ECS deployments | Fast: < 10 ms |
| Web Identity Provider | Credentials fetched via STS | Slow: could be 100 ms+ |

The Static, Shared and Environment providers are commonly used for local development and debugging due to their ease of use, while the remaining providers are typical of production-grade deployments.

Differences between EC2/ECS/EKS Credential Fetch Process

On EC2, credentials are fetched from the instance metadata endpoint. Because the request is served locally, this call is typically very fast.
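For comparison with the other providers, here is a minimal sketch of the EC2 flow using only the standard library. It follows the documented IMDSv2 exchange: a PUT for a session token, then a GET for the role's credentials. It assumes the role name is already known, whereas the real provider looks the name up first. A local `httptest` server stands in for `http://169.254.169.254`. This is not the SDK's own implementation.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// instanceCreds mirrors the fields of the JSON document the EC2 instance
// metadata service returns for an IAM role.
type instanceCreds struct {
	AccessKeyID     string    `json:"AccessKeyId"`
	SecretAccessKey string    `json:"SecretAccessKey"`
	Token           string    `json:"Token"`
	Expiration      time.Time `json:"Expiration"`
}

// fetchInstanceCreds performs the IMDSv2 flow: PUT for a session token, then
// GET the role's credentials with it. On an instance, baseURL would be
// http://169.254.169.254; the role name is assumed to be known already.
func fetchInstanceCreds(c *http.Client, baseURL, role string) (instanceCreds, error) {
	var out instanceCreds
	req, err := http.NewRequest(http.MethodPut, baseURL+"/latest/api/token", nil)
	if err != nil {
		return out, err
	}
	req.Header.Set("X-aws-ec2-metadata-token-ttl-seconds", "21600")
	resp, err := c.Do(req)
	if err != nil {
		return out, err
	}
	token, err := io.ReadAll(resp.Body)
	resp.Body.Close()
	if err != nil || resp.StatusCode != http.StatusOK {
		return out, fmt.Errorf("token request failed: status %d, err %v", resp.StatusCode, err)
	}
	req, err = http.NewRequest(http.MethodGet,
		baseURL+"/latest/meta-data/iam/security-credentials/"+role, nil)
	if err != nil {
		return out, err
	}
	req.Header.Set("X-aws-ec2-metadata-token", string(token))
	resp, err = c.Do(req)
	if err != nil {
		return out, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return out, fmt.Errorf("credentials request failed: status %d", resp.StatusCode)
	}
	err = json.NewDecoder(resp.Body).Decode(&out)
	return out, err
}

// newFakeIMDS is a local stand-in for the metadata service (demo only).
func newFakeIMDS() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		switch {
		case r.Method == http.MethodPut && r.URL.Path == "/latest/api/token":
			io.WriteString(w, "fake-token")
		case r.Header.Get("X-aws-ec2-metadata-token") != "fake-token":
			w.WriteHeader(http.StatusUnauthorized)
		default:
			io.WriteString(w, `{"AccessKeyId":"AKIDEXAMPLE","SecretAccessKey":"secret",`+
				`"Token":"token","Expiration":"2030-01-01T00:00:00Z"}`)
		}
	}))
}

func main() {
	srv := newFakeIMDS()
	defer srv.Close()
	start := time.Now()
	creds, err := fetchInstanceCreds(srv.Client(), srv.URL, "example-role")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(creds.AccessKeyID, "expires", creds.Expiration, "fetched in", time.Since(start))
}
```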

Things become more complicated in a containerized world. In an EC2 deployment, a single application usually occupies the whole instance. In a containerized deployment, a single EC2 instance can run multiple applications, and each of them may require a different set of IAM permissions.

ECS, being a proprietary AWS product, solves this by running the ECS container agent. The agent fetches credentials internally via its credential manager and exposes them through an endpoint, from which application containers can fetch credentials much as they would on EC2. Because the request is served by the container agent running on the same host, this call is also typically very fast.
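On ECS, the SDK discovers the agent's endpoint through environment variables. The following is a simplified sketch of that lookup, assuming the documented `AWS_CONTAINER_CREDENTIALS_RELATIVE_URI` variable (resolved against the agent address `169.254.170.2`) and the alternative `AWS_CONTAINER_CREDENTIALS_FULL_URI`. The real SDK also validates full URIs and sends an authorization token when one is configured. Both steps are omitted here, and `credentialsURL` is a hypothetical helper.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// ecsCredsHost is the link-local address the ECS agent serves credentials on.
const ecsCredsHost = "http://169.254.170.2"

// credentialsURL is a hypothetical, simplified version of how an SDK decides
// where to fetch container credentials from. getenv is injected for testing.
func credentialsURL(getenv func(string) string) (string, error) {
	if rel := getenv("AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"); rel != "" {
		return ecsCredsHost + rel, nil
	}
	if full := getenv("AWS_CONTAINER_CREDENTIALS_FULL_URI"); full != "" {
		return full, nil // host validation omitted in this sketch
	}
	return "", errors.New("no container credentials endpoint configured")
}

func main() {
	u, err := credentialsURL(os.Getenv)
	if err != nil {
		// A credential chain would move on to the next provider here.
		fmt.Println("falling back to the next provider:", err)
		return
	}
	fmt.Println("fetching credentials from", u)
}
```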

EKS aims to be compatible with community-driven Kubernetes, which rules out vendor-specific solutions like the ECS container agent. Community-driven solutions such as kiam and kube2iam were useful before an official solution existed. Now that AWS has implemented IAM roles for service accounts, the community alternatives are no longer recommended because of their drawbacks. For example, kiam requires fairly broad IAM permissions so that it can assume roles on others' behalf.

IAM roles for service accounts works by having the AWS SDK inside the application read the Kubernetes service account token mounted into the Pod as a volume. The SDK then exchanges that token for real credentials via STS AssumeRoleWithWebIdentity.

Because an STS API call is required, this credential fetching process is significantly slower than on EC2 or ECS. To make things worse, the SDK uses the global STS endpoint by default, so the call may be routed to a different AWS region with higher latency. This also adds operational risk when the us-east-1 region has an outage.

SDK Credential retrieval process

Credential fetching is performed lazily: CredentialsProvider.Retrieve() is only invoked once the client makes its first API call.

This is also a blocking process, so the time spent fetching credentials adds directly to the duration of that first request.

The following diagram depicts the sequence of events when an application calls a vanilla AWS client for the first time.

SDK Credential expiry process

Temporary credentials are commonly used to improve security. The AWS SDK caches them and provides an ExpiryWindow setting, which lets credentials be refreshed some time before they actually expire and so avoids spurious permission-denied errors.

However, this refresh is still blocking, so whichever API request triggers the refresh of expired credentials still suffers the latency penalty.

Connecting the dots

Now let’s connect the dots. This application internally used multiple instances of the AWS SNS client.

After application startup, none of these clients had initialized credentials, so each had to go through the credential fetching process outlined above. Once the application was ported onto EKS, the STS call added non-negligible latency to those first API requests. The tail latency increase came from the same STS overhead, incurred whenever the credentials expired.

Here is effectively what happened during application startup:

Getting a fix

With the root cause identified and the problem statement defined, let’s work on a fix.

Goal of the fix

Before writing any code, let’s define the properties the fix should have:

- Should address the issue: eliminate the application API latency increase caused by the AWS credential initialization and refresh processes;

- Should be easy to use: minimally intrusive to the existing code base and applicable with very little change;

- Should be portable: able to function in different deployment environments without an engineer having to configure it;

- Should be robust: fail gracefully and never perform worse than the default SDK implementation.

Attempt #1

Our first attempt, pre-warming the SDK clients after application initialization, solved the startup latency issue, but the tail latency issue remained.

We also tried overriding methods of credentials.Credentials to change its behavior. Only afterwards did we realize that this concrete type is referenced directly throughout the SDK code base (the signer is one example), rather than through an interface that would allow polymorphism. That turned the idea into a dead end.
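The dead end comes down to how Go method sets work. In the sketch below, all types and functions are hypothetical, and none of this is SDK code. Embedding a concrete type and "overriding" one of its methods only helps callers that accept an interface. Any function that takes the concrete pointer type can only be handed the embedded value, so the original method runs.

```go
package main

import "fmt"

// Credentials is a hypothetical stand-in for a concrete SDK type.
type Credentials struct{}

// Get is the behaviour we would like to change.
func (c *Credentials) Get() string { return "original Get" }

// signWithConcrete depends on the concrete type, as the SDK code does.
func signWithConcrete(c *Credentials) string { return c.Get() }

// getter is the kind of interface that would have allowed substitution.
type getter interface{ Get() string }

// signWithInterface depends only on behaviour.
func signWithInterface(g getter) string { return g.Get() }

// patchedCredentials "overrides" Get by embedding *Credentials.
type patchedCredentials struct{ *Credentials }

func (p patchedCredentials) Get() string { return "patched Get" }

func main() {
	p := patchedCredentials{&Credentials{}}
	// Only the embedded *Credentials fits the concrete parameter,
	// so the override is silently bypassed.
	fmt.Println(signWithConcrete(p.Credentials))
	// An interface-typed parameter picks up the override.
	fmt.Println(signWithInterface(p))
}
```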

Attempt #2

After the first attempt failed, we needed to find a different solution to tackle this problem.

Reading the code

We took a step back to analyze what code paths are involved throughout the life cycle of a single API request.

Reading the code, we saw that client.Client implements the basic client used by all service clients. It contains request.Handlers, a collection of handler lists that process a request throughout its various stages (for example Build, Sign and Send).

The Sign handler list looked particularly interesting. By checking how service clients initialize it (using the DynamoDB client as an example), we found the handler that actually does the job: v4.SignRequestHandler.

Digging further, we found that v4.SignRequestHandler is merely a package variable that ultimately refers to v4.SignSDKRequestWithCurrentTime. Conveniently, that is a variadic function whose options allow further customization of the underlying signer.
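To see why this hook is enough, here is a toy model of the mechanism. The types are hypothetical, stripped-down stand-ins named after the SDK's `request.NamedHandler`, `request.HandlerList`, `v4.Signer` and `v4.BuildNamedHandler`. Only the overall shape is meant to match the SDK: a named handler that can be swapped out, and a signer configured through variadic options.

```go
package main

import "fmt"

// Hypothetical, stripped-down stand-ins for request.Request, v4.Signer,
// request.NamedHandler and request.HandlerList; not the SDK's real types.
type Request struct{ SignedWith string }

type Signer struct{ Credentials string }

type NamedHandler struct {
	Name string
	Fn   func(*Request)
}

type HandlerList struct{ list []NamedHandler }

// PushBackNamed appends a named handler to the list.
func (l *HandlerList) PushBackNamed(h NamedHandler) { l.list = append(l.list, h) }

// Swap replaces every handler registered under name and reports whether any was found.
func (l *HandlerList) Swap(name string, h NamedHandler) bool {
	swapped := false
	for i := range l.list {
		if l.list[i].Name == name {
			l.list[i] = h
			swapped = true
		}
	}
	return swapped
}

// Run invokes each handler in order.
func (l *HandlerList) Run(r *Request) {
	for _, h := range l.list {
		h.Fn(r)
	}
}

// BuildNamedHandler returns a sign handler that creates a signer with the
// default credentials, lets each variadic option customize it, then signs.
func BuildNamedHandler(name string, opts ...func(*Signer)) NamedHandler {
	return NamedHandler{Name: name, Fn: func(r *Request) {
		s := &Signer{Credentials: "default chain"}
		for _, opt := range opts {
			opt(s)
		}
		r.SignedWith = s.Credentials
	}}
}

func main() {
	var sign HandlerList
	sign.PushBackNamed(BuildNamedHandler("v4.SignRequestHandler"))

	r := &Request{}
	sign.Run(r)
	fmt.Println("before swap, signed with:", r.SignedWith)

	// Same name, one extra option: the rest of the pipeline is untouched.
	sign.Swap("v4.SignRequestHandler", BuildNamedHandler("v4.SignRequestHandler",
		func(s *Signer) { s.Credentials = "our cached credentials" }))
	r = &Request{}
	sign.Run(r)
	fmt.Println("after swap, signed with:", r.SignedWith)
}
```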

This looked promising and quite doable, so the plan became:

- Find a way to load credentials on application startup;

- Find a way to asynchronously fetch credentials in the background before expiry;

- Find a way to replace credentials used by the signer with the ones fetched from previous steps;

Implementation

Let’s start by defining some types: a CredentialGenerator function type and a CredentialProvider interface. Most of the heavy lifting happens in the implementation of CredentialProvider, which we will fill in later.

import (
	"time"

	"github.com/aws/aws-sdk-go/aws/credentials"
)

// CredentialGenerator is a helper type that abstracts fetching a *fresh* AWS credential.
type CredentialGenerator func() (*credentials.Credentials, error)

// CredentialProvider is a helper interface that allows credentials to be refreshed.
type CredentialProvider interface {
	// RefreshCredential refreshes the cached credential on every tick of t
	// until stopCh receives a value or is closed.
	RefreshCredential(t *time.Ticker, stopCh <-chan interface{})
	// GetCredential returns the current credential.
	GetCredential() (*credentials.Credentials, error)
}

Now we can provide a helper function that patches the signer and replaces its credentials with the ones we supply.

import (
	"log"

	"github.com/aws/aws-sdk-go/aws/client"
	v4 "github.com/aws/aws-sdk-go/aws/signer/v4"
)

// ReplaceAwsClientV4Signer replaces the default v4 sign handler of awsClient
// with a new one whose signer reads credentials from credProvider.
func ReplaceAwsClientV4Signer(credProvider CredentialProvider, awsClient *client.Client) {
	signerOpt := func(signer *v4.Signer) {
		// only replace the credential if it can be acquired successfully;
		// otherwise the signer keeps the one from the default chain
		cred, err := credProvider.GetCredential()
		if err != nil {
			log.Printf("failed to read credential from provider. "+
				"falling back to provider from chain: %v", err)
			return
		}
		// replace the credential with the one we provide
		signer.Credentials = cred
	}

	v4SignerName := v4.SignRequestHandler.Name
	// create a new sign handler that applies signerOpt to every request
	patchedSignHandler := v4.BuildNamedHandler(v4SignerName, signerOpt)
	// replace the existing v4 sign handler in the SDK client
	if !awsClient.Handlers.Sign.Swap(v4SignerName, patchedSignHandler) {
		log.Printf("%s not found; client left unpatched", v4SignerName)
	}
}

Now let’s fill in the implementation of CredentialProvider.

The cached credentials are read far more often than they are written, since writes only happen on refresh. It therefore makes sense to use atomic.Value, which keeps the implementation mutex-free while remaining safe for concurrent use.
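As a standalone illustration of that read-mostly pattern, the sketch below uses hypothetical `snapshot` and `cache` types. Readers call `Load` without taking a lock, and a single writer publishes a new immutable value with `Store`, so a reader only ever sees a complete value. On Go 1.19 or later, `atomic.Pointer[T]` gives the same behaviour without the type assertion.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// snapshot is a hypothetical immutable credential value. Each refresh
// stores a brand-new pointer instead of mutating the old one.
type snapshot struct {
	version int
	key     string
}

// cache wraps an atomic.Value that always holds a *snapshot.
type cache struct{ v atomic.Value }

// load returns the current snapshot, or nil before the first store.
func (c *cache) load() *snapshot {
	s, _ := c.v.Load().(*snapshot)
	return s
}

// store atomically publishes a new snapshot.
func (c *cache) store(s *snapshot) { c.v.Store(s) }

func main() {
	var c cache
	c.store(&snapshot{version: 1, key: "first"})

	var wg sync.WaitGroup
	// Many lock-free readers, as on the request-signing path.
	for i := 0; i < 8; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < 1000; j++ {
				if s := c.load(); s == nil || s.key == "" {
					panic("reader observed an incomplete credential")
				}
			}
		}()
	}
	// One writer, as the background refresher would be.
	c.store(&snapshot{version: 2, key: "second"})
	wg.Wait()
	fmt.Println("current version:", c.load().version)
}
```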

import (
	"errors"
	"log"
	"sync/atomic"
	"time"

	"github.com/aws/aws-sdk-go/aws/credentials"
)

// CachedAwsCredProvider caches a credential and refreshes it on demand.
type CachedAwsCredProvider struct {
	// v caches the current *credentials.Credentials
	v atomic.Value
	// credGenFn is used to acquire a *fresh* credential
	credGenFn CredentialGenerator
}

func NewCachedAwsCredProvider(credGenFn CredentialGenerator) *CachedAwsCredProvider {
	return &CachedAwsCredProvider{credGenFn: credGenFn}
}

// fetchAndCacheCred generates a fresh credential, forces it to be retrieved
// (so any STS call happens here rather than while signing a request),
// and caches it.
func (c *CachedAwsCredProvider) fetchAndCacheCred() (*credentials.Credentials, error) {
	cred, err := c.credGenFn()
	if err != nil {
		return nil, err
	}
	if _, err := cred.Get(); err != nil {
		return nil, err
	}
	c.v.Store(cred)
	return cred, nil
}

// RefreshCredential refreshes the cached credential on every tick until
// stopCh receives a value or is closed. If a refresh fails, the previously
// cached credential is kept.
func (c *CachedAwsCredProvider) RefreshCredential(t *time.Ticker, stopCh <-chan interface{}) {
	for {
		select {
		case <-stopCh:
			return
		case <-t.C:
			if _, err := c.fetchAndCacheCred(); err != nil {
				log.Printf("failed to refresh credential: %v", err)
			}
		}
	}
}

// GetCredential returns the cached credential.
func (c *CachedAwsCredProvider) GetCredential() (*credentials.Credentials, error) {
	v := c.v.Load()
	if v == nil {
		// not initialized yet: fall back to fetching the credential synchronously
		return c.fetchAndCacheCred()
	}
	cred, ok := v.(*credentials.Credentials)
	if !ok {
		return nil, errors.New("should not happen: failed to cast cred")
	}
	return cred, nil
}

Now let’s add a few auxiliary functions to complete the implementation. CredentialGeneratorWithDefaultChain extracts credentials using the default chain, which keeps the code portable across different deployment options. GetCredentialProvider forces credentials to be retrieved when the provider is created, and also sets up the asynchronous refresh in the background.

import (
	"fmt"
	"time"

	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/credentials"
	"github.com/aws/aws-sdk-go/aws/endpoints"
	"github.com/aws/aws-sdk-go/aws/session"
)

// CredentialGeneratorWithDefaultChain uses the AWS default credential chain
// to create credentials. The regional STS endpoint is used to improve latency.
//
// NOTE: a fresh session must be created on each call; otherwise the
// session's cached *credentials.Credentials would be reused.
var CredentialGeneratorWithDefaultChain = func() (*credentials.Credentials, error) {
	s, err := session.NewSession(&aws.Config{
		STSRegionalEndpoint: endpoints.RegionalSTSEndpoint,
	})
	if err != nil {
		return nil, fmt.Errorf("failed to create aws session: %w", err)
	}
	return s.Config.Credentials, nil
}

// GetCredentialProvider creates a CachedAwsCredProvider, fetches a credential
// eagerly and starts refreshing it in the background.
func GetCredentialProvider() CredentialProvider {
	credProvider := NewCachedAwsCredProvider(CredentialGeneratorWithDefaultChain)
	// force getting the credential on initialization
	if _, err := credProvider.GetCredential(); err != nil {
		panic(err)
	}
	// refresh the credential asynchronously; hard coded to refresh every
	// 10 minutes, forever (the stop channel is never closed)
	go credProvider.RefreshCredential(time.NewTicker(10*time.Minute), make(chan interface{}))
	return credProvider
}

To apply the patch, simply call the helper functions on the SDK clients.

import (
	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/session"
	"github.com/aws/aws-sdk-go/service/s3"
	"github.com/aws/aws-sdk-go/service/sns"
)

func main() {
	// initialize the AWS clients as before
	awsSession := session.Must(session.NewSession(&aws.Config{}))
	snsClient := sns.New(awsSession)
	s3Client := s3.New(awsSession)

	// a few extra lines to acquire the credential provider and patch the SDK clients
	credProvider := GetCredentialProvider()
	ReplaceAwsClientV4Signer(credProvider, snsClient.Client)
	ReplaceAwsClientV4Signer(credProvider, s3Client.Client)

	// ... continue using the clients as usual
	_ = snsClient
	_ = s3Client
}

Achievements

With the changes above, we achieved our original goals, with the following highlights:

- The first-request latency penalty was removed, as the app fetches credentials immediately at startup;

- The credential expiry penalty was also removed, as refreshes happen asynchronously;

- Credential fetching is faster because the SDK is forced to use the regional STS endpoint;

- The change uses a stable, public hook exposed by the AWS SDK, so no changes to the SDK source code are necessary;

- The change is very easy to deploy: one extra line to create the provider, plus one line per client;

- We still use the default SDK credential chain, so no extra configuration is necessary for different deployment environments;

- The performance impact on the application is minimal, as credentials are swapped in a lock-free manner;

- Our code falls back gracefully to the default AWS SDK behavior when an unexpected error occurs.